BLOG | September 26, 2023

Stop Bullsh*t Campaigns: Prove the real value of your campaigns with the right attribution setting

By Rodrigo Álvarez Farré – Performance Manager at Winclap

Welcome to Attribution

First, we must define what we mean by attribution in performance marketing: “It is the act of determining what caused a user to install an app (or to perform post-install acts)”.

When we think about where an install can come from, there are two main possibilities:

- Installs attributed to Organic → There was no interaction between the user and paid media.

- Installs attributed to Paid Media Sources (such as Google, Meta, TikTok, Liftoff, etc.)

But the tough question is: how do we determine which one it was?

The answer lies in attribution models and MMPs. An attribution model is a set of rules that determine how credit for an event (the install) is assigned to touchpoints in conversion paths (from different media sources).

Luckily, for app campaigns we have MMPs (Mobile Measurement Partners), which act as the judges here: they collect data from different media sources and then attribute each install to the right one, according to the attribution model rules.

How does it work technically? The MMP collects data:

- From the publishers, where the user views or clicks an ad.

- From the Play Store, where the user installs the app.

- Then the MMP matches the data to determine which media source's ad caused the user to install the app.

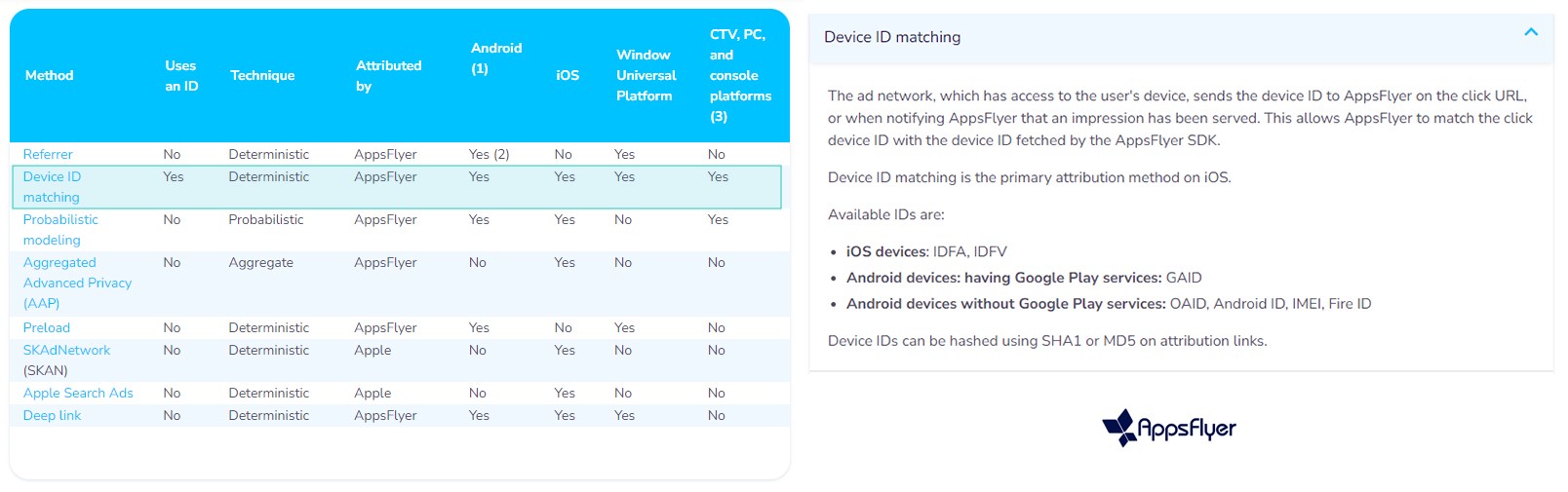

There are many different attribution models, and each one works with different rules. For app campaigns, it is common to use Device ID Matching.
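To make the matching step more concrete, here is a minimal Python sketch of device ID matching. It assumes that both the touchpoint records and the install records carry a device identifier (such as an advertising ID). The `Touchpoint` and `Install` types, their fields, and `match_by_device_id` are hypothetical names for illustration, not any MMP's real schema or API. The sketch only collects the candidate touchpoints that happened before each install. Picking the winner is left to the attribution model rules.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Touchpoint:
    device_id: str     # hypothetical field: advertising ID seen on the ad click/view
    media_source: str  # e.g. "Meta", "TikTok"
    kind: str          # "click" or "view"
    time: datetime


@dataclass
class Install:
    device_id: str     # hypothetical field: advertising ID reported on first app open
    time: datetime


def match_by_device_id(touchpoints, installs):
    """Return {device_id: [touchpoints that happened before that device's install]}."""
    # Index touchpoints by device ID so each install needs only one lookup.
    by_device = defaultdict(list)
    for tp in touchpoints:
        by_device[tp.device_id].append(tp)

    matches = {}
    for inst in installs:
        # Only touchpoints at or before the install are candidates for attribution.
        matches[inst.device_id] = [
            tp for tp in by_device.get(inst.device_id, []) if tp.time <= inst.time
        ]
    return matches
```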

Understanding the attribution model rules

We know that MMPs make attribution easier to check by determining it based on the attribution model rules, but how do those rules work? For user acquisition campaigns, we must set the attribution windows.

There are two attribution windows:

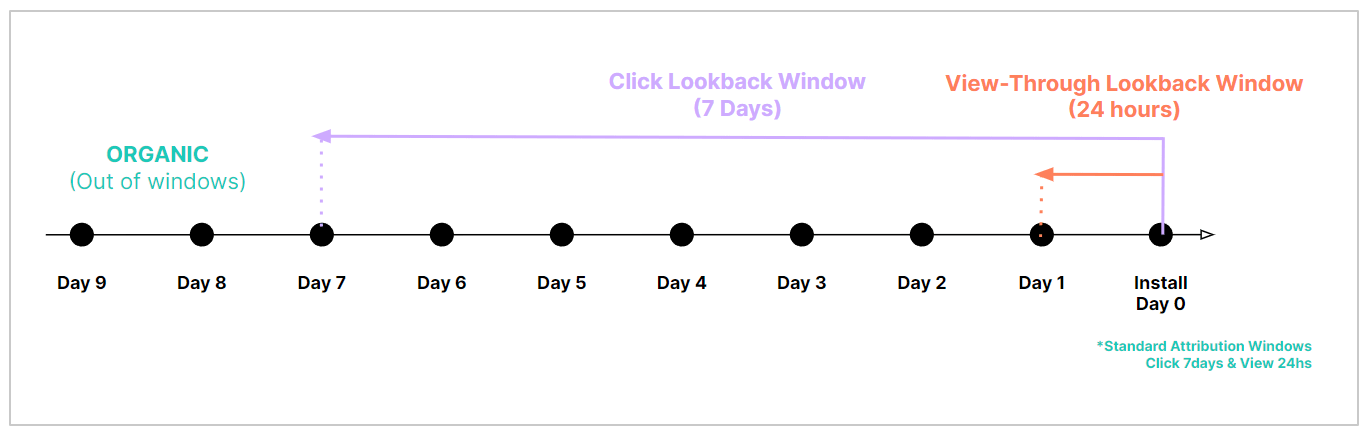

- Click Lookback Window → Period of time after an ad is clicked within which an install can be attributed to the ad. Industry Standard → 7 days. Min: 1 day, Max: 30 days.

- View-through Lookback Window → Period of time after an ad is viewed within which an install can be attributed to the ad. Industry Standard → 24 hours. Min: 1 hour, Max: 24 hours.
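One way to keep these settings explicit is a small configuration object that rejects values outside the allowed ranges. The sketch below is only an illustration. `LookbackWindows` is a hypothetical name, and the bounds are the ones quoted above, which may differ between MMPs and networks.

```python
from dataclasses import dataclass
from datetime import timedelta

# Bounds as quoted in this post; check your MMP's documentation for its actual limits.
CLICK_MIN, CLICK_MAX = timedelta(days=1), timedelta(days=30)
VIEW_MIN, VIEW_MAX = timedelta(hours=1), timedelta(hours=24)


@dataclass(frozen=True)
class LookbackWindows:
    click: timedelta = timedelta(days=7)    # industry-standard click window
    view: timedelta = timedelta(hours=24)   # industry-standard view-through window

    def __post_init__(self):
        # Reject settings outside the ranges listed above.
        if not CLICK_MIN <= self.click <= CLICK_MAX:
            raise ValueError(f"click window {self.click} outside {CLICK_MIN}..{CLICK_MAX}")
        if not VIEW_MIN <= self.view <= VIEW_MAX:
            raise ValueError(f"view window {self.view} outside {VIEW_MIN}..{VIEW_MAX}")
```

For example, `LookbackWindows(view=timedelta(hours=6))` describes a stricter 6-hour view-through window, while a 31-day click window raises `ValueError`.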

How do Attribution Lookback Windows work?

- A click within its lookback window always wins over impressions/views.

- If there are several clicks within the click lookback window, the most recent one wins.

- If there are no clicks within the click window, an impression/view can win. In that case, the most recent impression/view within the view-through window wins.

- If there are no clicks or views within their windows, the install is attributed to organic.

Attribution Windows (Lookback Windows)

1. The model looks for CLICKS. Was there any click within the CLICK window?
   - YES → Install attributed to the last CLICK.
   - NO → Go to step 2.

2. The model looks for VIEWS/IMPRESSIONS. Was there any view within the VIEW window?
   - YES → Install attributed to the last VIEW.
   - NO → Install comes from ORGANIC.
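The two-step decision can be sketched in Python as a single function. This is a simplified, hypothetical implementation of the click-first, last-touch logic described in this post. It assumes each touchpoint is a `(media_source, kind, time)` tuple. Real MMPs apply additional rules that are not modeled here.

```python
from datetime import datetime, timedelta


def attribute_install(install_time, touchpoints,
                      click_window=timedelta(days=7),
                      view_window=timedelta(hours=24)):
    """Apply the click-first, last-touch rules to a single install.

    touchpoints: iterable of (media_source, kind, time) tuples, kind in {"click", "view"}.
    Returns the winning media source, or "organic".
    """
    touchpoints = list(touchpoints)  # allow iterating more than once

    def last_in_window(kind, window):
        # Touchpoints of this kind within `window` before (or at) the install time.
        eligible = [
            (time, source) for source, k, time in touchpoints
            if k == kind and install_time - window <= time <= install_time
        ]
        # max() on (time, source) tuples picks the most recent touchpoint.
        return max(eligible)[1] if eligible else None

    # Step 1: was there any click within the click window? The last one wins.
    winner = last_in_window("click", click_window)
    if winner is None:
        # Step 2: was there any view within the view window? The last one wins.
        winner = last_in_window("view", view_window)
    return winner if winner is not None else "organic"


install = datetime(2023, 9, 10, 12, 0)
touches = [
    ("Google", "click", install - timedelta(days=3)),
    ("Meta", "view", install - timedelta(hours=1)),
]
print(attribute_install(install, touches))  # Google: a click in its window beats a more recent view
```

If no click or view falls within its window, the function returns `"organic"`.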

Here are 3 examples:

1. Install would be attributed to the most recent click.

2. Install would be attributed to the last view.

3. Install would be attributed as “Organic”

How can we play with Attribution Windows and see more incrementality?

Once we understand how attribution works (MMPs, attribution models, lookback windows), we can ask: how does it affect incrementality? What can we do about it?

The industry standard is a 7-day click window and a 24-hour view window, but does that hold for every case? Are those installs really incremental? The key point is that attribution windows are customizable, so we can set them according to what we consider incremental.

We can shorten (or extend) our attribution windows. This way, fewer (or more) installs are attributed to paid media, because we reduce (or increase) the time during which we consider that paid media touchpoints contribute to installs.

To decide which time period to set for each window, we can run a simple analysis on historical data: look at how many installs arrive per day/hour after the touchpoint (a histogram) and study that behavior. Then we can make a data-driven decision about shortening the window, forecast how many attributed installs we would lose, and finally get a more realistic CPI.

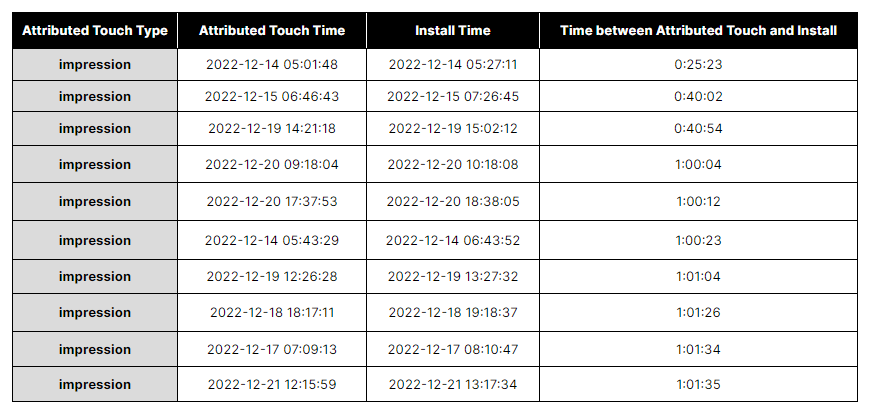

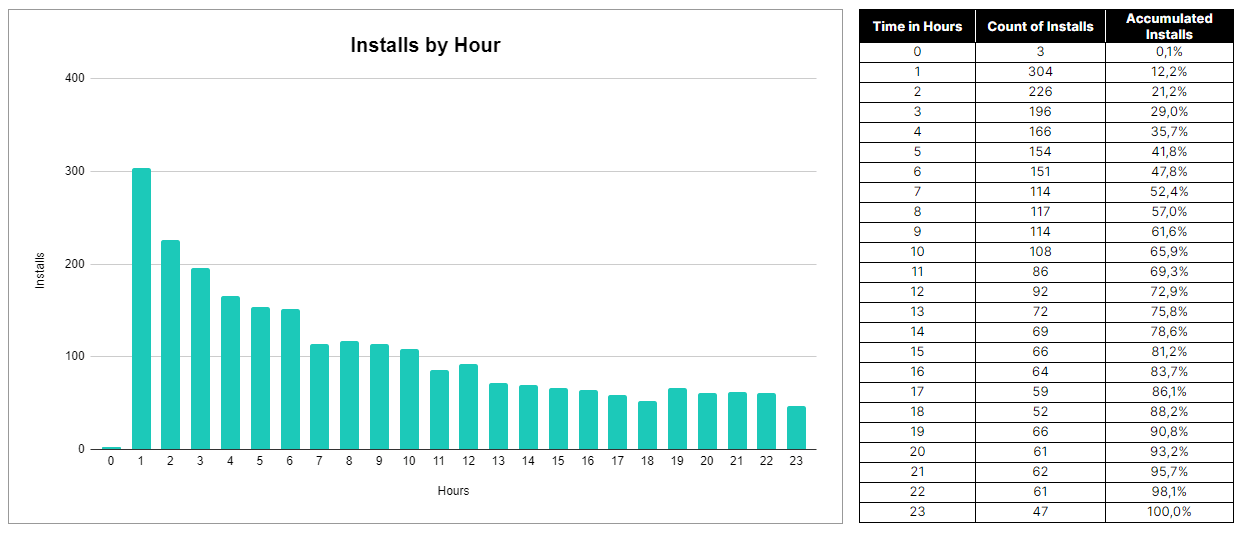

As an example, here we downloaded historical raw data from the MMP and calculated the time between touchpoints and installs, using the view-through lookback window that was in place at the time (24 hours).
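The delay calculation can be sketched as follows. It assumes a CSV export in which each row has the attributed touch timestamp and the install timestamp. The column names (`Attributed Touch Time`, `Install Time`) and the timestamp format are assumptions modeled on a typical MMP raw-data report, so check them against your own export. `hours_to_install` is a hypothetical helper.

```python
import csv
import io
from datetime import datetime

# Assumed timestamp format; adapt it to your MMP's export.
TIME_FORMAT = "%Y-%m-%d %H:%M:%S"


def hours_to_install(raw_csv_text,
                     touch_col="Attributed Touch Time",
                     install_col="Install Time"):
    """Return the touch-to-install delay, in hours, for every row of a raw-data CSV."""
    delays = []
    for row in csv.DictReader(io.StringIO(raw_csv_text)):
        touch = datetime.strptime(row[touch_col], TIME_FORMAT)
        install = datetime.strptime(row[install_col], TIME_FORMAT)
        delays.append((install - touch).total_seconds() / 3600)
    return delays
```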

*This kind of analysis can only forecast the impact of shortening the windows, not of extending them, because we have no data on what happens after the current window closes.


We can see how many installs came in during each hour of this 24-hour view-through lookback window. Based on this information, we could:

- Make a hypothesis on how our users decide to install our app after viewing an ad.

- Define how many hours we consider incremental for our view-lookback window.

- Forecast the number of installs that we would not attribute to paid media if we reduced the attribution window to fewer hours.

- Calculate the hypothetical CPI that we could expect to have if we shortened the window, assuming that it would be more realistic and incremental.
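The histogram and the forecast can be sketched in a few lines of Python, starting from a list of touch-to-install delays in hours. The function names are hypothetical. The forecast assumes that every install beyond the shorter window stops being attributed to paid media while spend stays constant. In practice, some of those installs could be reassigned to another eligible touchpoint instead of becoming organic, so the result is best read as an upper bound on the paid installs lost.

```python
import math
from collections import Counter


def hourly_histogram(delays_hours):
    """Count installs per hour after the touchpoint (bucket 0 = first hour)."""
    return Counter(math.floor(d) for d in delays_hours)


def forecast_shorter_window(delays_hours, new_window_hours, spend):
    """Forecast attributed installs, lost installs and CPI for a shorter window.

    Assumes installs beyond `new_window_hours` would no longer be attributed to
    paid media and that spend stays the same.
    """
    kept = sum(1 for d in delays_hours if d <= new_window_hours)
    lost = len(delays_hours) - kept
    cpi = spend / kept if kept else float("inf")
    return {"kept": kept, "lost": lost, "cpi": cpi}
```

Comparing `spend / len(delays_hours)` (the current CPI) with the forecast `cpi` shows how much the CPI would rise under the shorter window.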

Another simple analysis is to compare the share of installs coming from post-click attribution with the share coming from view-through attribution. We could consider post-click installs more incremental, so if we saw many post-view installs, we could be stricter with that window by shortening it.
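This comparison takes only a few lines once each attributed install is labeled with its touch type. The sketch below assumes a list of `"click"`/`"view"` labels (for example, taken from the touch-type column of an MMP raw-data export). `touch_type_shares` is a hypothetical helper. Deciding what counts as "many" post-view installs remains a judgment call.

```python
from collections import Counter


def touch_type_shares(touch_types):
    """Return the share of attributed installs per touch type, e.g. {"click": 0.7, "view": 0.3}."""
    counts = Counter(touch_types)
    total = sum(counts.values())
    # Avoid dividing by zero when there are no attributed installs.
    return {kind: n / total for kind, n in counts.items()} if total else {}
```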

Our Takeaway

Attribution is extremely important for measuring the incrementality of our paid media campaigns. The MMPs play a key role here, by attributing installs to the right media sources (or organic) according to the attribution model rules that we set up.

There is no single rule that works for every case. It always depends on the app’s nature, the user’s behavior, and our hypothesis on how a view or a click can really contribute to getting a user to install an app after some hours or days.

The most important thing here is that we can customize the rules according to what we believe is incremental, and so obtain more realistic and incremental results that will be the basis for our further decisions.

Finally, it is essential to align the MMP attribution windows with those of the self-reporting network media sources, so that we avoid discrepancies between their reports and the MMP data.
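A simple way to check this alignment is to list the window settings used in the MMP and in each self-reporting network and flag any difference. In this sketch, the settings are assumed to be entered by hand as dictionaries. `window_mismatches` is a hypothetical helper and does not call any MMP or network API.

```python
from datetime import timedelta


def window_mismatches(mmp_windows, network_windows):
    """List (network, window_type, mmp_value, network_value) wherever settings differ.

    Both arguments map network name -> {"click": timedelta, "view": timedelta}.
    A network missing from the MMP settings is reported with None as the MMP value.
    """
    mismatches = []
    for network, settings in network_windows.items():
        mmp = mmp_windows.get(network, {})
        for kind, value in settings.items():
            if mmp.get(kind) != value:
                mismatches.append((network, kind, mmp.get(kind), value))
    return mismatches
```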

If you’re not sure how attribution models could affect your incrementality and results, and you would like to know more, feel free to reach out!